
Background

Traditionally collected through in-person interviews, diary logs, and health questionnaires, self-reports of mental health and well-being are increasingly gathered through online tools, such as mobile and web apps, for their efficiency and accessibility (e.g., [1, 2]). Although these tools have become our primary mode of interaction with technology and support the efficient collection of self-reports in textual and visual forms, they offer only one medium of interaction, which can limit users' capacity for self-expression. Research suggests that current self-report technologies can place a significant burden on users, which may adversely affect their self-reporting experience and undermine sustained engagement [3, 4]. In contrast, by using speech as the mode of interaction, Conversational Agents (CAs) can engage users in more 'natural', human-like conversations and may offer the opportunity to obtain richer insights into mental health and well-being. CAs' intuitive mode of interaction may also foster more engaging and sustainable self-reporting experiences, addressing the challenges of current self-report technologies.

Objectives

Considering the potential of CAs to support effective and engaging self-reporting experiences, this project investigated the feasibility of a CA for the self-report of mental health and well-being in terms of its (i) accuracy in capturing user responses, (ii) usability as experienced by end users, and (iii) capacity to sustain user engagement. These questions were addressed through two empirical studies:

Study 1

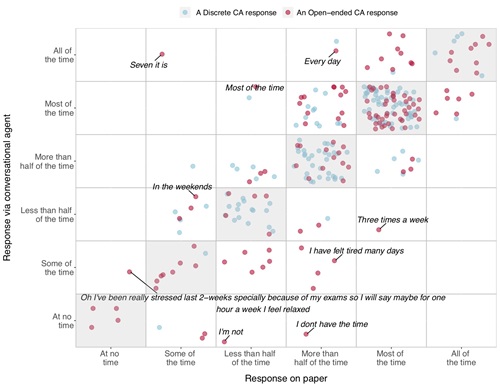

We designed an agent named Sofia with two distinct conversational designs: the first requiring discrete responses to the WHO-5 questionnaire and the second allowing open-ended answers. To evaluate the accuracy of self-reports collected via the CA, we conducted a lab-based random assignment study with 59 participants, comparing the self-reports gathered through each CA design against the gold standard of paper-based responses to the same questionnaire. As shown in Figure 1, the results demonstrated strong correlations between users' CA- and paper-based responses, suggesting that the CA can accurately capture self-reports of well-being in a conversational setting [5].

Figure 1: The coherence between responses to the WHO-5 questionnaire as provided through Sofia and on paper [5]
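The comparison described above can be illustrated with a minimal sketch. It assumes the standard WHO-5 scoring (five items, each rated 0 to 5, with the raw sum multiplied by 4 to give a 0 to 100 score) and Pearson's r as the correlation measure; the summary does not state which coefficient the study used. The function names and all participant data below are hypothetical and invented purely for illustration; they are not results from the study.

```python
from math import sqrt


def who5_percentage(item_scores):
    """Convert five WHO-5 item scores (each 0-5) into the 0-100 percentage score."""
    if len(item_scores) != 5 or any(not 0 <= s <= 5 for s in item_scores):
        raise ValueError("WHO-5 expects five item scores in the range 0-5")
    return sum(item_scores) * 4  # raw score 0-25, scaled to 0-100


def pearson_r(xs, ys):
    """Pearson correlation coefficient between two equal-length score lists."""
    if len(xs) != len(ys) or len(xs) < 2:
        raise ValueError("need two lists of equal length with at least 2 values")
    mean_x = sum(xs) / len(xs)
    mean_y = sum(ys) / len(ys)
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    if var_x == 0 or var_y == 0:
        raise ValueError("correlation is undefined when one list is constant")
    return cov / sqrt(var_x * var_y)


# Hypothetical item scores for four made-up participants (NOT study data):
# one row per participant, one column per WHO-5 item.
ca_items = [[3, 4, 2, 3, 4], [1, 2, 1, 0, 2], [5, 4, 5, 4, 5], [2, 3, 3, 2, 2]]
paper_items = [[3, 4, 3, 3, 4], [1, 1, 1, 0, 2], [5, 5, 5, 4, 5], [2, 3, 2, 2, 3]]

ca_scores = [who5_percentage(items) for items in ca_items]
paper_scores = [who5_percentage(items) for items in paper_items]
r = pearson_r(ca_scores, paper_scores)

print("CA scores:   ", ca_scores)
print("Paper scores:", paper_scores)
print("Pearson r:   ", round(r, 3))
```

A coefficient close to 1 would indicate that participants' CA-based scores track their paper-based scores closely, which is the kind of agreement Figure 1 reports.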

Study 2

To investigate the CA's usability and to compare users' engagement with a CA against a more traditional web-based method of self-reporting, we conducted a four-week, two-arm field deployment study. A thematic analysis of interview data from the 20 participants who used Sofia (v.2), which enabled them to log their well-being daily by answering a series of three open-ended questions and to complete the WHO-5 questionnaire fortnightly, highlighted the need to address outstanding technical limitations and design challenges relating to conversational pattern matching, filling users' unmet interpersonal gaps, and the use of self-report CAs in the at-home social context. A comparative analysis of 22 participants' engagement with either the CA or a web app that imitated the CA's data entry features revealed that the CA is a more novel and attractive means of self-report than the traditional web-based method. Based on these findings, this thesis contributes initial evidence of the CA's feasibility for the self-report of mental health and well-being.

References

1. Jakob E. Bardram, Mads Frost, Károly Szántó, Maria Faurholt-Jepsen, Maj Vinberg, and Lars Vedel Kessing. 2013. Designing mobile health technology for bipolar disorder: a field trial of the monarca system. In Proceedings of the SIGCHI Conference on Human Factors in Computing Systems (CHI '13). Association for Computing Machinery, New York, NY, USA, 2627–2636. DOI: https://doi.org/10.1145/2470654.2481364

2. Darius A. Rohani, Andrea Quemada Lopategui, Nanna Tuxen, Maria Faurholt-Jepsen, Lars V. Kessing, and Jakob E. Bardram. 2020. MUBS: A Personalized Recommender System for Behavioral Activation in Mental Health. In Proceedings of the 2020 CHI Conference on Human Factors in Computing Systems (CHI '20). Association for Computing Machinery, New York, NY, USA, 1–13. DOI: https://doi.org/10.1145/3313831.3376879

3. Kevin Doherty, Andreas Balaskas, and Gavin Doherty. 2020. The design of ecological momentary assessment technologies. Interacting with Computers 32, 1 (2020), 257–278. DOI: https://doi.org/10.1093/iwcomp/iwaa019

4. Niels van Berkel, Denzil Ferreira, and Vassilis Kostakos. 2017. The Experience Sampling Method on Mobile Devices. ACM Comput. Surv. 50, 6, Article 93 (January 2018), 40 pages. DOI: https://doi.org/10.1145/3123988

5. Raju Maharjan, Darius Adam Rohani, Per Bækgaard, Jakob Bardram, and Kevin Doherty. 2021. Can we talk? Design Implications for the Questionnaire-Driven Self-Report of Health and Wellbeing via Conversational Agent. In CUI 2021 - 3rd Conference on Conversational User Interfaces (CUI '21). Association for Computing Machinery, New York, NY, USA, Article 5, 1–11. DOI: https://doi.org/10.1145/3469595.3469600