About the Project

Tele-robots, such as telepresence robots, have the potential to improve the quality of life of people with limited mobility, especially those who are confined to bed. Patients and other individuals with motor disabilities who cannot use their hands may instead use their eye gaze to interact with such robots. With a gaze-controlled telepresence robot, they may be able to take part in events at geographically distant locations and move around at will by steering with their gaze. However, previous studies of the system suggest that novice users need substantial practice to steer a tele-robot by gaze with a head-mounted display. Simulators can offer a cost-effective and safe way to acquire the skills needed to operate a robot, and VR-based simulation has been used extensively in robotics. We have therefore developed a VR-based simulator for training gaze control of such tele-robots. In this study, we aim to investigate the potential learning effects of the simulator.

Tasks for participants

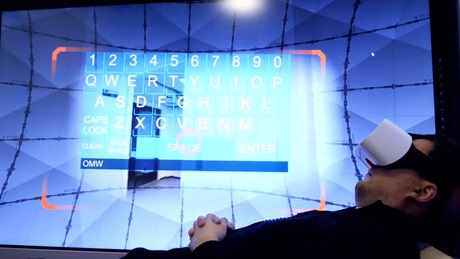

Gaze control using a VR headset with built-in eye trackers.

Duration

Around 50 minutes

Inclusion Criteria

Individuals who can speak and read English, irrespective of age, gender, race, religion, or ethnic background.

Exclusion Criteria

Individuals who already know that they may experience VR sickness after using a VR headset continuously for more than 10 minutes.

Individuals who currently have eye health problems.

Data

All data will be collected anonymously. The stored data is subject to the EU General Data Protection Regulation (GDPR).

Compensation

Each participant will receive a gift card worth DKK 100 that can be used at a variety of shops.

Informed Consent

Each participant is given a consent form before the test starts and must agree to its terms in order to continue.

Contact Information

For practical and technical questions, please contact Guangtao Zhang at guazha@dtu.dk.

References

Hansen, J. P., Alapetite, A., Thomsen, M., Wang, Z., Minakata, K., & Zhang, G. (2018). Head and gaze control of a telepresence robot with an HMD. In Proceedings of the 2018 ACM Symposium on Eye Tracking Research & Applications (82: 1-3). ACM.

Zhang, G., Hansen, J. P., Minakata, K., Alapetite, A., & Wang, Z. (2019). Eye-gaze-controlled telepresence robots for people with motor disabilities. In 14th ACM/IEEE International Conference on Human-Robot Interaction (HRI) (574-575). IEEE.

Zhang, G., Hansen, J. P., & Minakata, K. (2019). Hand- and gaze-control of telepresence robots. In Proceedings of the 11th ACM Symposium on Eye Tracking Research & Applications (70: 1-8). ACM.